
Automatic Acquisition of High-fidelity Facial Performances Using Monocular Videos

SIGGRAPH Asia 2014

Fuhao Shi*, Hsiang-Tao Wu#, Xin Tong#, Jinxiang Chai*

*Texas A&M University  #Microsoft Research Asia

Abstract

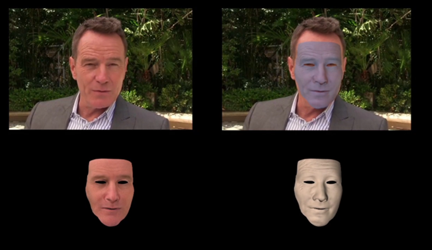

This paper presents a facial performance capture system that automatically captures high-fidelity facial performances from uncontrolled monocular videos (e.g., Internet videos). We start by detecting and tracking important facial features, such as the nose tip and the eye and mouth corners, across the entire sequence. We then use the detected features together with multilinear facial models to reconstruct the subject's 3D head pose and large-scale facial deformation at each frame. We use per-pixel shading cues to add fine-scale surface details, such as emerging or disappearing wrinkles and folds, to the large-scale facial deformations. As a final step, we iterate our reconstruction procedure over large-scale facial geometry and fine-scale facial detail to further improve the accuracy of facial reconstruction. We demonstrate the effectiveness of our system under a variety of uncontrolled lighting conditions and across significant shape differences among individuals, including differences in expression, age, gender, and race. By comparing against alternative methods, we show that our system advances the state of the art in facial performance capture.
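The abstract fits multilinear facial models to tracked features. As a hedged illustration only, the sketch below assumes a bilinear (identity × expression) core tensor of the kind used in multilinear face models. It evaluates the model and, with the identity weights held fixed, recovers expression weights by regularized linear least squares against target 3D vertex coordinates. The tensor layout and the functions `expression_basis`, `evaluate`, `solve`, and `fit_expression` are hypothetical and are not the authors' implementation. The paper fits 2D facial features under an estimated head pose, and this simplified example omits that step.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]
# Hypothetical layout: core[k][i][j], where k = flattened vertex coordinate,
# i = identity mode, j = expression mode.
Tensor3 = List[Matrix]


def expression_basis(core: Tensor3, w_id: Vector) -> Matrix:
    """Contract the identity mode: A[k][j] = sum_i core[k][i][j] * w_id[i]."""
    n_exp = len(core[0][0])
    return [
        [sum(slab[i][j] * w_id[i] for i in range(len(w_id))) for j in range(n_exp)]
        for slab in core
    ]


def evaluate(core: Tensor3, w_id: Vector, w_exp: Vector) -> Vector:
    """Mesh coordinates V = (core x_id w_id) x_exp w_exp."""
    basis = expression_basis(core, w_id)
    return [sum(a * w for a, w in zip(row, w_exp)) for row in basis]


def solve(m: Matrix, b: Vector) -> Vector:
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(b)
    aug = [row[:] + [b[r]] for r, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def fit_expression(core: Tensor3, w_id: Vector, target: Vector, reg: float = 1e-6) -> Vector:
    """With identity fixed, V is linear in w_exp, so solve the regularized
    normal equations (A^T A + reg * I) w = A^T target."""
    a = expression_basis(core, w_id)
    n = len(a[0])
    ata = [
        [sum(row[p] * row[q] for row in a) + (reg if p == q else 0.0) for q in range(n)]
        for p in range(n)
    ]
    atb = [sum(row[p] * t for row, t in zip(a, target)) for p in range(n)]
    return solve(ata, atb)
```

Holding one mode fixed makes a bilinear model linear in the other mode's weights. For that reason, bilinear fits are often done by alternating between identity and expression updates. This sketch shows only the expression half of such an alternation.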

